A few weeks ago I had an urge to try game and/or graphics development on Android. I've been doing some Android application development lately, so I already had all the needed tools set up. However, what I wanted was a cross-platform engine or framework so that I could develop mostly on the desktop. Working with emulators and physical devices is tolerable for regular app development, and of course you want to use the UI framework native to the platform. For games or other hardware-stressing apps, I really wouldn't like to work like that. The emulator is useless as it doesn't support OpenGL ES 2.0 and is generally very slow. And a physical device is no pleasure either: you keep grabbing it off the table, APK upload and installation take time, etc. So I started looking for some solutions.

The Search

Initially, I considered using something like Oxygene. It uses Object Pascal syntax and can compile to both .NET bytecode and Java bytecode (and use .NET and Java libs). However, I would still need some framework for graphics, input, etc., so I would be locked into the .NET or Java world anyway. I'm somewhat inclined to pick up Oxygene for something in the future (there are also native OSX and iOS targets in the works) but not right now. Also, it's certainly not cheap for just a few test programs. Then there is Xamarin - Mono for Android and iOS (MonoGame should work here?) - but it is even more expensive. When you look for Android game engines and frameworks specifically, you end up with things like AndEngine, Cocos2D-X, and libGDX.

After a little bit of research, I settled on libGDX. It is a Java framework with some native parts (for tasks where JVM performance may be inadequate), currently targeting desktop (Win/OSX/Linux), Android, and HTML5 (Javascript + WebGL in fact). Graphics are based on OpenGL (desktop) and OpenGL ES (Android and web). The great thing is that you can do most of the development on the desktop Java version, with faster debugging and hot code swapping. You can also use OpenGL commands directly, which is a must (at least for me).

The Test

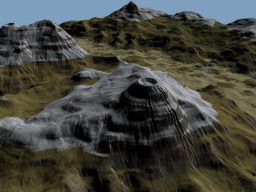

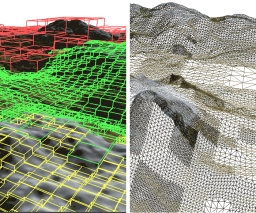

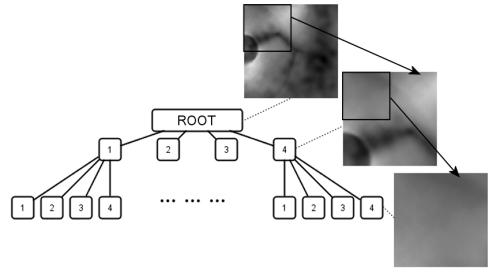

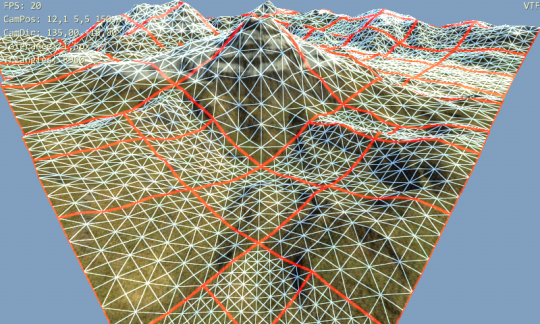

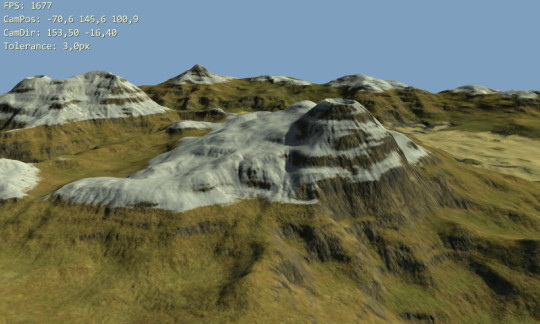

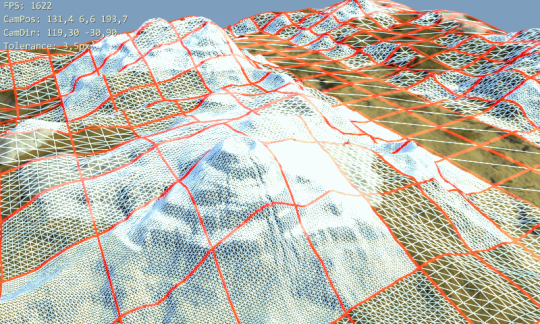

I wanted to do a test program first, and I decided to convert the glSOAR terrain renderer to Java and OpenGL ES (GLES). The original is written in Object Pascal using desktop OpenGL. The plan was to convert it with as few modifications as possible. At least for 3D graphics, libGDX is a relatively thin layer on top of GLES. You have some support classes like texture, camera, vertex buffer, etc., but you still need to know what a projection matrix is, bind resources before rendering, etc. What follows is a list of a few ideas, tasks, and problems from the conversion (focusing on Java and libGDX).

Project Setup

Using Eclipse, I created the project structure for libGDX-based projects. It is quite neat actually: you have a main Java project with all the shared platform-independent code and then one project for each platform (desktop, Android, ...). Each platform project has just a few lines of starter code that instantiates your shared main project for the respective platform (of course you can do more here).

There are two problems here, though. Firstly, changes in the main project won't trigger a rebuild of the Android project, so you have to trigger it manually (for example by adding or deleting an empty line in the Android project code) before running on Android. This is actually a bug in version 20 of the ADT Eclipse plugin, so hopefully it will be fixed in v21 (you can star this issue).

The second issue is asset management, but that is easy to fix. I want to use the same textures and other assets in the version for each platform, so they should be located in some directory shared between all projects (like inside the main project, or a few directory levels up). The thing is that on Android all assets are required to be located in the assets subdirectory of the Android project. The recommended libGDX solution is to store the assets in the Android project and create links (link folder or link source) in Eclipse for the desktop project pointing to the Android assets. I didn't really like that. I want my stuff to be where I want it to be, so I created file system links instead. I used the mklink command in Windows to create junctions (as Total Commander wouldn't follow symlinks properly):

d:\Projects\Tests\glSoarJava> mklink /J glSoarAndroid\assets\data Data

Junction created for glSoarAndroid\assets\data <<===>> Data

d:\Projects\Tests\glSoarJava> mklink /J glSoarDesktop\data Data

Junction created for glSoarDesktop\data <<===>> Data

Now I have a shared Data folder at the same level as the project folders. In the future, though, I guess some platform-specific assets will be needed (like different texture compression formats, sigh).

Java

Too bad Java does not have user-defined value types (like struct in C#). I split the TVertex type into three float arrays: one for 3D positions and the rest for SOAR LOD-related parameters. Value-type vectors at least would be nice: it is vertices[idx * 3 + 1] now instead of vertices[idx].y, and which is more readable? Making TVertex a class and spawning millions of instances didn't seem like a good idea even before I began. It would be impossible to pass the positions to OpenGL that way anyway.
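To make the split concrete, here is a minimal sketch of such a "structure of arrays" layout. It is not the actual glSOAR port: the class name VertexStore and the two LOD arrays (error and radius) are hypothetical, chosen only for illustration.

```java
// Sketch only: VertexStore, error and radius are hypothetical names,
// not taken from the actual glSOAR port.
final class VertexStore {
    /** Packed positions: x, y, z of vertex i sit at i*3, i*3+1, i*3+2. */
    final float[] positions;
    /** Assumed per-vertex LOD parameters, one float per vertex each. */
    final float[] error;
    final float[] radius;

    VertexStore(int vertexCount) {
        positions = new float[vertexCount * 3];
        error = new float[vertexCount];
        radius = new float[vertexCount];
    }

    /** Writes all three coordinates of one vertex. */
    void setPosition(int idx, float x, float y, float z) {
        int base = idx * 3;
        positions[base] = x;
        positions[base + 1] = y;
        positions[base + 2] = z;
    }

    // Small accessors hide the index arithmetic from the calling code.
    float x(int idx) { return positions[idx * 3]; }
    float y(int idx) { return positions[idx * 3 + 1]; }
    float z(int idx) { return positions[idx * 3 + 2]; }
}
```

With accessors like these, store.y(idx) reads almost like vertices[idx].y, while the positions stay in one contiguous float array that can be handed over to OpenGL in a single copy.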

Things like data for VBOs, vertex arrays, etc. are passed to OpenGL in Java using direct buffers (descendants of the Buffer class). The same goes for generating IDs with glGen[Textures|Buffers|...] and basically everything else that takes pointer-to-memory parameters. Of course, it makes sense in an environment where you cannot just touch memory as you want. Still, it is kind of annoying for someone not used to that. At least libGDX comes with some buffer utils, including fast copying through native JNI code.
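As an illustration of what that means in practice, the following sketch wraps a float array in a direct buffer using only the standard java.nio classes. The class and method names (DirectBuffers, toDirectFloatBuffer, newIdBuffer) are made up for this example, and the libGDX buffer utilities are deliberately left out.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;
import java.nio.IntBuffer;

// Sketch only: the helper names are hypothetical, only java.nio is used.
final class DirectBuffers {
    private static final int BYTES_PER_FLOAT = 4;
    private static final int BYTES_PER_INT = 4;

    /** Copies a float array into a direct, native-order buffer ready for reading. */
    static FloatBuffer toDirectFloatBuffer(float[] data) {
        ByteBuffer bytes = ByteBuffer.allocateDirect(data.length * BYTES_PER_FLOAT);
        bytes.order(ByteOrder.nativeOrder()); // native code expects the platform byte order
        FloatBuffer floats = bytes.asFloatBuffer();
        floats.put(data);
        floats.flip(); // position back to 0, limit set to the number of floats written
        return floats;
    }

    /** Allocates an empty direct int buffer, e.g. to receive IDs from a glGen* call. */
    static IntBuffer newIdBuffer(int count) {
        ByteBuffer bytes = ByteBuffer.allocateDirect(count * BYTES_PER_INT);
        bytes.order(ByteOrder.nativeOrder());
        return bytes.asIntBuffer();
    }
}
```

A buffer produced this way is the kind of argument the Java GL bindings expect wherever the C API takes a pointer. Forgetting the flip() call is a common mistake: the buffer then appears to have nothing left to read.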

Performance

The roots of the SOAR terrain rendering method are quite old today and come from the times when it was okay to spend CPU time to limit the number of triangles sent to the GPU as much as possible. On PCs, the situation has been the complete opposite for a long time (unless you had an old integrated GPU, that is). I guess today that is true for hardware in mobile devices as well (at least the new ones). And there will also be some Java/Dalvik overhead...

Anyway, this is just an exercise, so the end result may very well be useless; the experience gained during development is what counts.

OpenGL >> GLES

Continue reading in the next post, which focuses on the OpenGL to OpenGL ES transition.
